Deepfakes fuel spread of health misinformation online

JournalismPakistan.com | Published 1 hour ago | JP Global Monitoring Desk


Investigations show AI deepfakes impersonating doctors are spreading health misinformation on social platforms, prompting calls for regulators, platforms, and newsrooms to strengthen verification and public education.

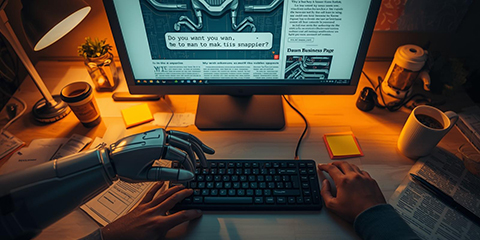

LONDON — Journalists and public health investigators are increasingly documenting the spread of AI-generated deepfake videos that impersonate doctors and medical professionals to promote unproven supplements and risky treatments on social media platforms, particularly TikTok.

Reports published by The Guardian detail how synthetic videos mimic the appearance and voices of real clinicians, lending false credibility to health claims that lack scientific backing. Researchers and policy groups warn that repeated exposure to such content increases public susceptibility to false medical information.

Rising use of synthetic medical impersonation

The documented cases involve generative AI tools used to fabricate realistic videos of medical figures endorsing products ranging from dietary supplements to unapproved therapies. In several instances, the individuals being impersonated were unaware their likenesses were being used, highlighting gaps in consent and enforcement.

Public health experts say these tactics exploit trust in doctors and local clinicians, making misinformation harder for audiences to detect. Unlike traditional false claims, deepfakes add a visual and emotional layer that can override skepticism, especially on short-form video platforms optimized for rapid sharing.

Platform and newsroom challenges

Technology companies have policies against impersonation and medical misinformation, but investigators note that enforcement often lags behind the speed at which synthetic content is produced and distributed. Advocacy groups are urging platforms to improve detection tools and speed up takedown processes.

For newsrooms, the trend is prompting reassessments of verification workflows. Health and verification desks are being encouraged to adopt forensic checks for AI artifacts, expand access to trusted medical experts for rapid authentication, and collaborate more closely with civil society groups tracking harmful synthetic media.

The Guardian and other media outlets emphasize that addressing the issue will require coordinated responses involving platforms, regulators, journalists, and public health authorities, alongside broader public education on recognizing AI-generated content.

KEY POINTS:

- AI-generated deepfakes are impersonating doctors to promote unproven health products

- Investigations show social media platforms are key distribution channels

- Experts warn repeated exposure increases vulnerability to false medical claims

- Newsrooms are urged to strengthen AI verification and forensic checks

- Platforms face pressure to improve detection and enforcement mechanisms

ATTRIBUTION: Reporting based on coverage and findings published by The Guardian and cited public health and policy research groups.